Using Needle MCP Server in Open WebUI

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted AI platform. It supports various LLM providers, such as Ollama and OpenAI-compatible APIs, as well as external tools.

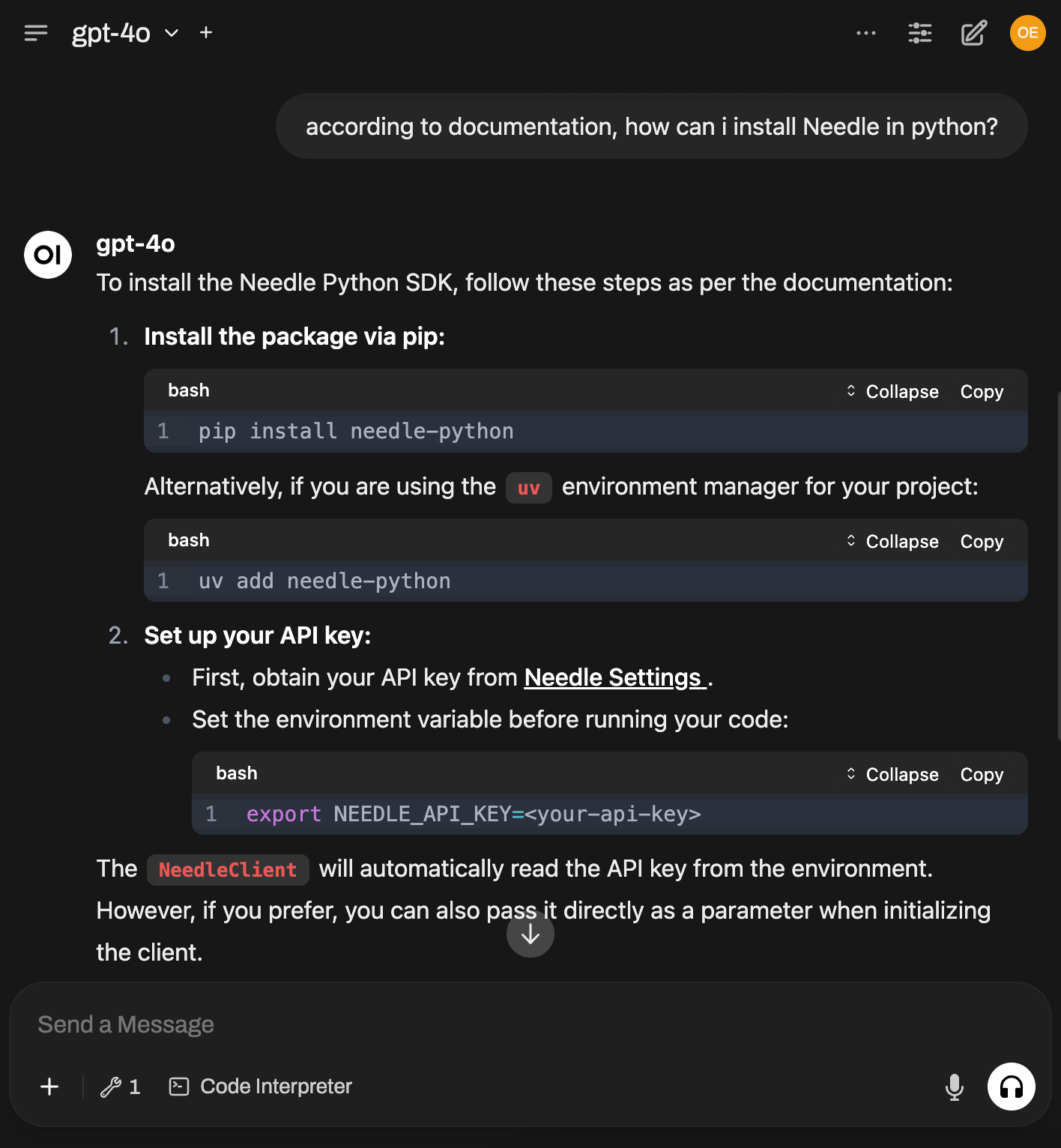

A must-have tool for any AI assistant is long-term memory, also known as RAG (retrieval-augmented generation) or semantic search.

It retrieves context relevant to the current conversation from your own documents, which improves answer accuracy: instead of generic responses, you get specific replies based on your knowledge base.

Let's see how you can enable long-term memory using Needle tools in your setup in a few steps. In this guide you will:

- Step 1: Start the Needle MCP Server

- Step 2: Connect the server to Open WebUI

- Step 3: Test your integration

What You'll Need

Before we dive in, make sure you have:

- Open WebUI already running. We'll assume you followed the official installation guide. We use the following command to run it locally on our machines:

    DATA_DIR=~/.open-webui uvx --python 3.11 open-webui@latest serve

- The uv package manager installed on your system

- npm (Node.js) installed on your system

- A Needle API key, which you can get from the settings page
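If you want to confirm the command-line prerequisites before continuing, a small check like the one below can help. It is only a sketch: `check_tool` is a helper name made up for this example, and it tests for `uvx` and `npx` because those are the two executables the commands in this guide call.

```shell
# Report whether a command-line tool is available on PATH.
# check_tool is a hypothetical helper, not part of uv or npm.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

# uvx ships with uv, npx ships with npm.
for tool in uvx npx; do
  check_tool "$tool" || true
done
```

Any line that starts with `missing:` points to a tool you still need to install.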

Don't worry if you're not a command-line expert. Basic experience is sufficient, and I'll walk through each step clearly.

Step 1: Start the Needle MCP Server

Get Needle's Model Context Protocol (MCP) server running locally. This server will act as a bridge between Open WebUI and Needle's tools.

In your terminal run this command, replacing <needle-api-key> with your actual API key:

    uvx mcpo \
      --port 8000 \
      -- npx mcp-remote https://mcp.needle.app/mcp \
      --header 'Authorization:Bearer <needle-api-key>'

What's happening here?

- uvx mcpo launches the MCP server locally

- --port 8000 sets it to run on port 8000

- The rest connects the local server to Needle's remote MCP service

You should see output indicating the server is starting up. Keep this terminal window open – the server needs to stay running for the integration to work.

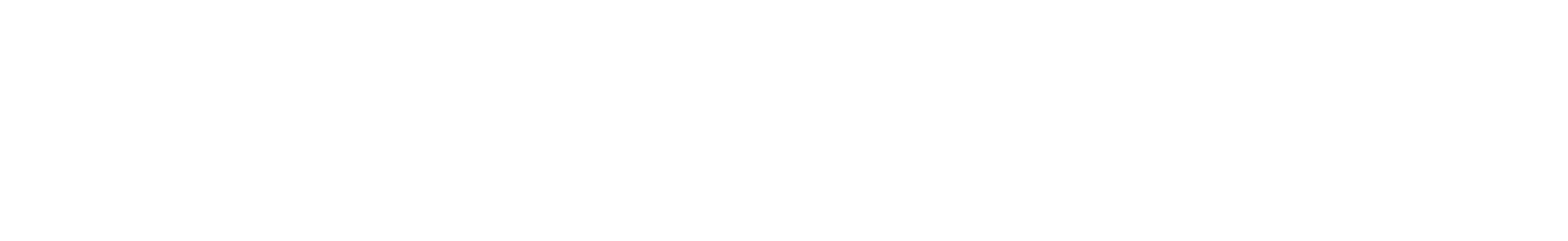
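If you would rather not paste the API key into the command itself, one option is to read it from an environment variable. The sketch below wraps the same command from Step 1 in a function. The variable name `NEEDLE_API_KEY` and the helpers `auth_header` and `start_needle_mcp` are assumptions made for this example, not names defined by Needle or mcpo.

```shell
# Build the Authorization header from NEEDLE_API_KEY.
# Fails with an error message if the variable is unset or empty.
auth_header() {
  printf 'Authorization:Bearer %s' "${NEEDLE_API_KEY:?set NEEDLE_API_KEY first}"
}

# Same command as in Step 1, with the key taken from the environment.
start_needle_mcp() {
  header=$(auth_header) || return 1
  uvx mcpo \
    --port 8000 \
    -- npx mcp-remote https://mcp.needle.app/mcp \
    --header "$header"
}

# Usage (replace the placeholder with your actual key):
#   export NEEDLE_API_KEY='<needle-api-key>'
#   start_needle_mcp
```

The function stops before launching anything when the key is missing, so a forgotten `export` shows up as a clear error instead of a failed authentication later.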

Verify the server is working

Before connecting to Open WebUI, let's make sure everything is working correctly. Open your web browser and navigate to: http://localhost:8000/docs

You should see the Needle tools documentation page, as shown below. This confirms that the MCP server is running and can communicate with Needle's services.

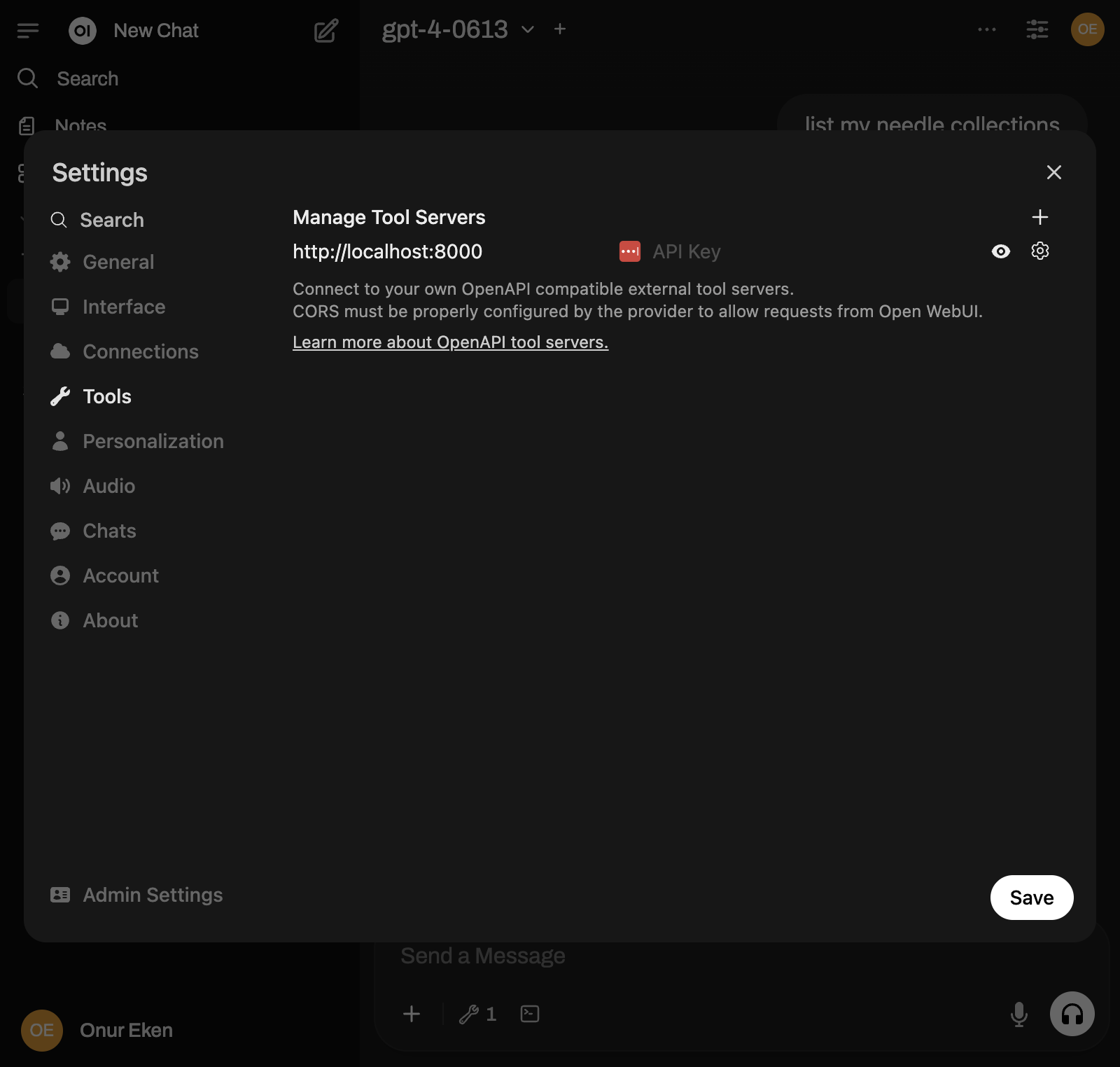
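The same check can be done from a second terminal with curl. This is a minimal sketch that assumes curl is installed. The `/docs` path comes from the step above, while `/openapi.json` is an assumption based on the usual convention for OpenAPI-based servers, so treat a failure on that path alone as inconclusive. `describe_status` is a helper invented for this example.

```shell
BASE_URL="http://localhost:8000"   # the port passed to mcpo in Step 1

# Turn an HTTP status code into a short verdict.
describe_status() {
  case "$1" in
    200) echo "reachable" ;;
    000|"") echo "no response (is the server running?)" ;;
    *) echo "unexpected HTTP status $1" ;;
  esac
}

for path in /docs /openapi.json; do
  # -s: quiet, -o /dev/null: discard the body, -w: print only the status code
  code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$BASE_URL$path") || true
  echo "$path: $(describe_status "$code")"
done
```

If `/docs` is reported as reachable, the local server is up and you can move on to the next step.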

Step 2: Connect the Server to Open WebUI

Now for the exciting part – connecting everything together!

In your Open WebUI interface:

- Navigate to Settings (usually in the top-right corner)

- Go to the Tools section

- Select Manage Tool Servers

- Click Add Connection

Fill in the connection details:

- URL: http://localhost:8000 (the server we started in the previous step)

- Authentication: Leave this empty (we already provided the API key to the server)

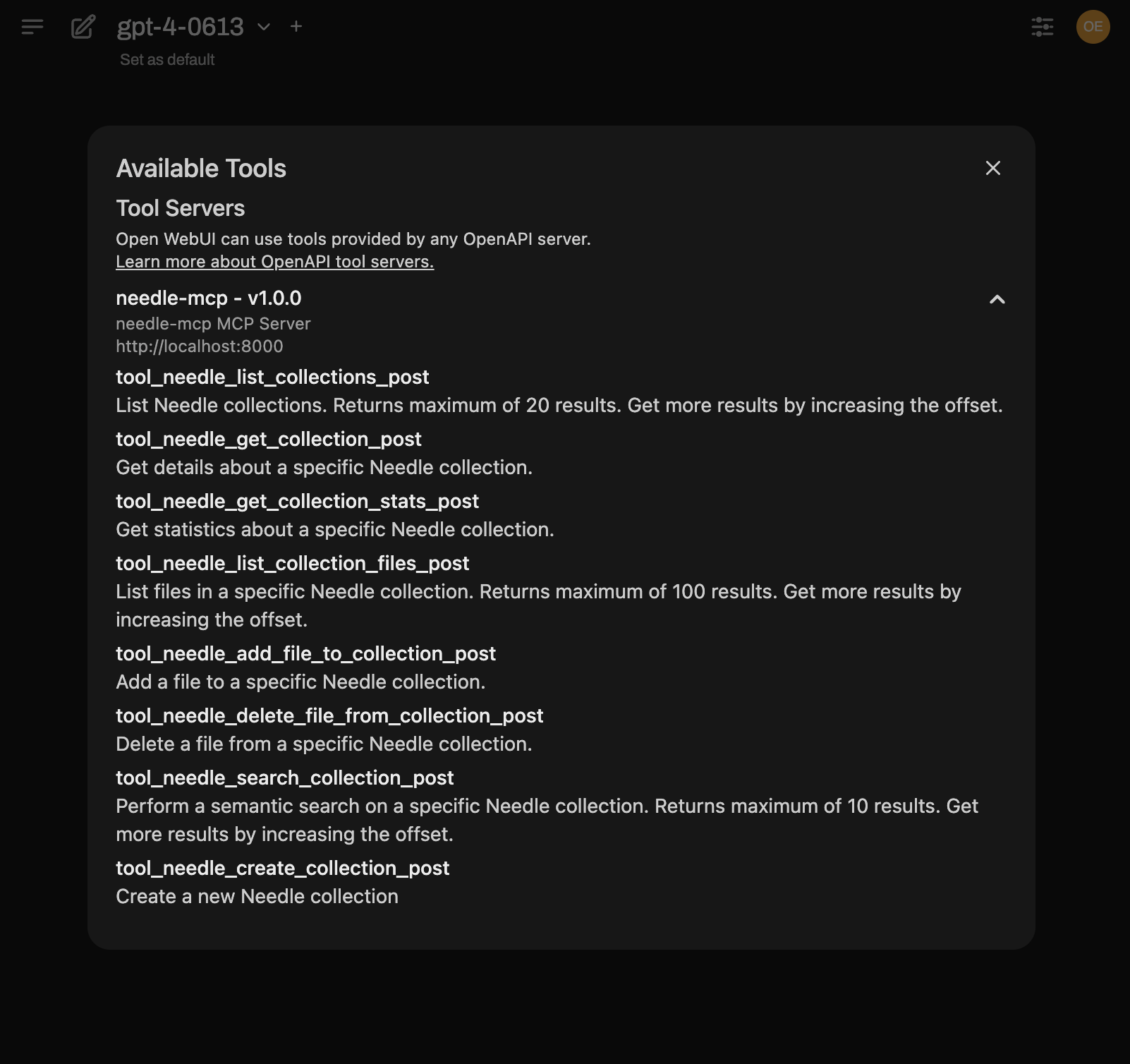

Step 3: Test Your Integration

Amazing! Time to see if everything works.

Look at your chat input field in Open WebUI – you should now see that the Needle tools are available and enabled.

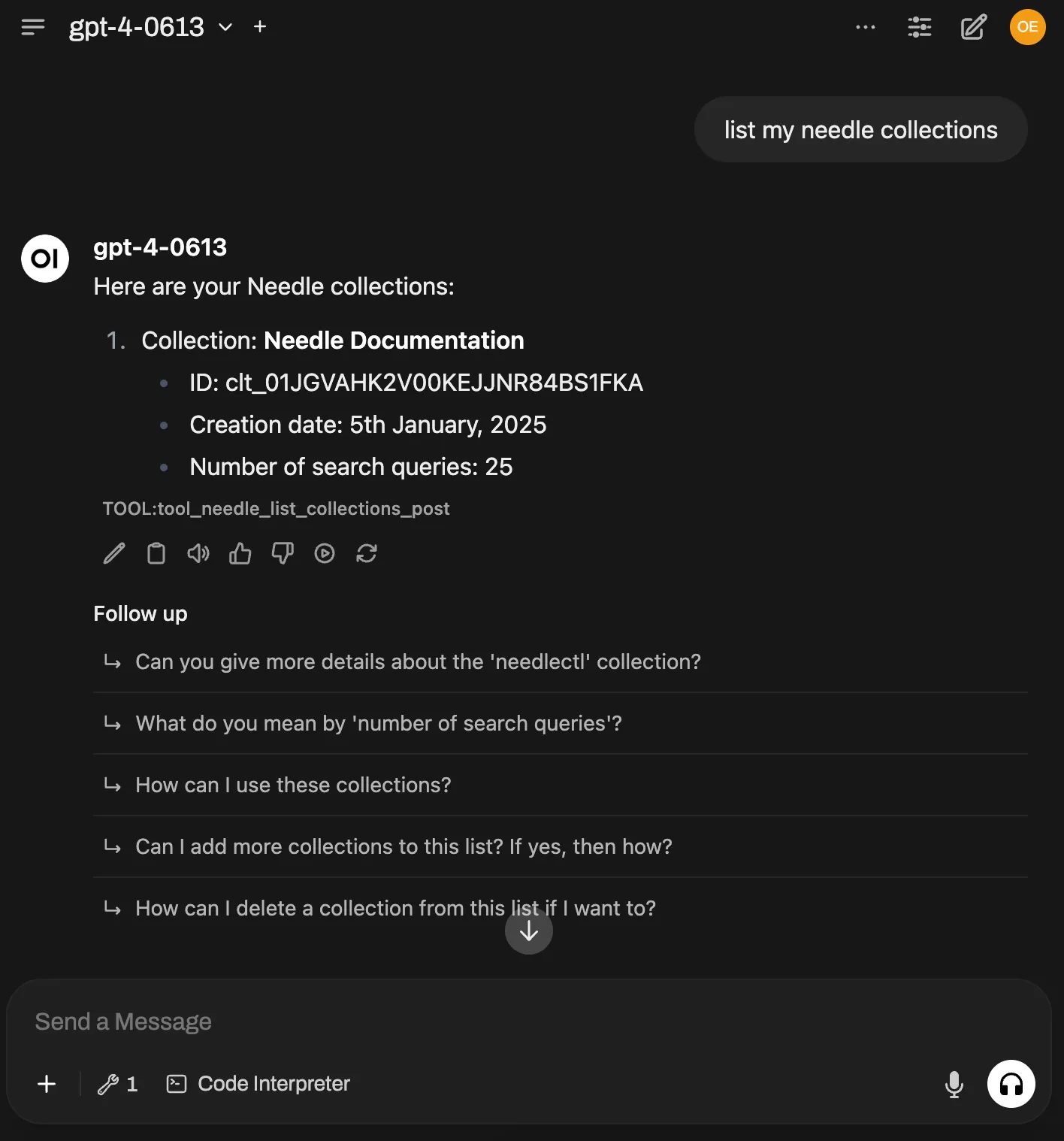

Let's test it with a simple command. In the chat, type: list my Needle collections.

If everything is connected properly, you should see a response showing your collections. This confirms that Open WebUI can successfully communicate with Needle's MCP server.

What's Next?

With Needle connected to Open WebUI, you can now:

- Search through your research collections directly from chat

- Organize and manage your knowledge base

The integration opens up powerful possibilities for research workflows. Experiment with different Needle tools to discover what works best for your use case.

Need help? The Needle and Open WebUI communities are great resources if you run into issues.

Troubleshooting

Server won't start?

- Make sure uv and npm are properly installed

- Check if another service is using port 8000

- Verify your API key is correct
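To check whether something is already listening on port 8000, a quick test like the following may help. It is a sketch that relies on bash's built-in `/dev/tcp` feature, so it needs bash and not a plain POSIX sh. `port_in_use` is a helper name made up for this example.

```shell
#!/usr/bin/env bash
# Succeed if something accepts TCP connections on host:port.
# Uses bash's /dev/tcp pseudo-device, so no extra tools are required.
port_in_use() {
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

if port_in_use 127.0.0.1 8000; then
  echo "port 8000 is already in use"
else
  echo "port 8000 looks free"
fi
```

Run it before starting the MCP server, because the server itself will make the port show as in use. If another service occupies the port, you can pass a different value to `--port` in Step 1 and use the matching URL when adding the connection in Open WebUI.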

Open WebUI can't connect?

- Ensure the MCP server is still running

- Double-check the URL is exactly: http://localhost:8000

- Try refreshing your Open WebUI page

Tools not appearing?

- Refresh the Open WebUI page after adding the connection

- Check the browser console for any error messages